Continuously Deploy a Hugo Site with GitLab CI

In case you hadn’t heard it on social media, The Polyglot Developer is part of a continuous integration (CI) and continuous deployment (CD) pipeline. Rather than using Hugo to manually build the site and then manually copying the files to a DigitalOcean VPS or similar, the Hugo changes are pushed to GitLab and GitLab takes care of the building and pushing.

Now you might be wondering why this matters, since manually building and pushing the site wasn’t particularly strenuous.

Having your web application as part of a CI / CD pipeline can streamline things that you would have otherwise needed to take into consideration. Here are some examples of where a pipeline would be of benefit, at least in the world of static website generation through tools like Hugo:

- Multiple authors and developers can work on the project without knowing sensitive information like SSH keys.

- Scheduled builds and deployments can be configured for content that is scheduled with a future date.

- Docker images can be automatically created and uploaded to a Docker registry.

Those are just some examples, and they reflect how things are done on The Polyglot Developer. In this tutorial, we’re going to explore that setup and how you can adopt it in your own static website generation workflow.

When it comes to the continuous integration and continuous deployment process, there are a few steps involved. The setup can seem lengthy, but once it’s done, you can sit back and relax. The assumption is that you’ve already got a Hugo project and a GitLab account.

Creating the Website Build Script with Gulp

When it comes to Hugo, you could technically run the hugo command to build your project and then deploy the results. However, you might not end up with an optimized final product in terms of modern web standards. For example, the build process for The Polyglot Developer includes building the Hugo site, combining all the CSS files into a single bundle, minifying the HTML, JavaScript, and CSS, and generating pre-cached service workers. Again, none of this is absolutely necessary, but these optimizations could go a long way when it comes to performance on the user’s computer or mobile phone.

In a previous tutorial, I explored in depth how to use Gulp for workflow automation. We’re going to see a small subset of that here.

At the root of your Hugo project, create a gulpfile.js file with the following:

var gulp = require("gulp");
var htmlmin = require("gulp-htmlmin");
var shell = require("gulp-shell");

gulp.task("hugo-build", shell.task(["hugo"]));

gulp.task("minify", () => {
    return gulp.src(["public/**/*.html"])
        .pipe(htmlmin({
            collapseWhitespace: true,
            minifyCSS: true,
            minifyJS: true,
            removeComments: true,
            useShortDoctype: true,
        }))
        .pipe(gulp.dest("./public"));
});

gulp.task("build", gulp.series("hugo-build", "minify"));

This is, of course, a very minimal Gulp workflow. Essentially, when someone or something executes npx gulp build, Gulp runs the hugo command through the shell, then pipes every HTML file in the public output directory through a minification step and writes the result back in place.

To make sure the Hugo project includes the correct Gulp dependencies, execute the following:

npm init -y
npm install gulp gulp-htmlmin gulp-shell --save-dev

The Gulp workflow that you build should meet your needs, not mine. The point here is that we’re going to have GitLab CI do something a little more complicated than just run a standard hugo command before deployment.

Designing the GitLab CI Build and Deployment Scripts

With the Gulp workflow out of the way, we can focus on the sequence of events that happens when we push our project to GitLab. To define what happens, we’ll need to create a .gitlab-ci.yml file at the root of our Hugo project. Create this file and add the following:

image: "gitlab/dind"

stages:
    - build
    - dockerize
    - deploy

build:
    stage: build
    image: "node:alpine"
    before_script:
        -
    script:
        -
    artifacts:
        paths:
            -

dockerize:
    stage: dockerize
    before_script:
        -
    script:
        -

deploy:
    image: "debian:buster"
    stage: deploy
    before_script:
        -
    script:
        -
    only:
        - schedules

You’ll notice that I’ve stripped out a lot of stuff as of now. If you pushed your project with this file as is, you will end up with failures in your GitLab CI pipeline. Let’s break down what is happening or should eventually happen based on the above configuration.

image: "gitlab/dind"

The above line says that our default CI runner image will be Docker compatible. This means we’ll have access to Docker commands if we wanted them. You can use any Docker image you want, but just be familiar with what it contains versus what you need.

Next we have the following stages:

stages:
    - build
    - dockerize
    - deploy

The above stages will run in the order that they are presented. So in our example, first the project will build, then it will do something with Docker, then it will deploy. The names of the stages can be anything, but it makes sense to give them a name related to what they’ll actually do.

So let’s look at the build stage:

build:
    stage: build
    image: "node:alpine"
    before_script:
        - 'which curl || ( apk update && apk add curl )'
        - curl -L https://github.com/gohugoio/hugo/releases/download/v0.55.6/hugo_0.55.6_Linux-64bit.tar.gz | tar -xz && mv hugo /usr/local/bin/hugo
        - npm install
    script:
        - npx gulp build
    artifacts:
        paths:
            - public

In the above stage, you’ll notice that the default image is not being used. Every stage is a separate process, and the default gitlab/dind image is quite heavy. If you use heavy images where you don’t need them, your pipeline is going to take a long time to finish.

Before the main script starts, we can use the before_script to first update our image and install the necessary dependencies, which include Hugo. The image already has Node.js, so we’re safe from that perspective. Before moving into the main script, all of the NPM dependencies of our project are downloaded based on the package.json file at the root of the project. This includes the Gulp dependencies.
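If you expect to upgrade Hugo later, it can help to keep the version number in one place. The following is only a minimal sketch: hugo_url and install_hugo are hypothetical helper names, and the archive naming pattern is copied from the v0.55.6 URL above, so it may not hold for every Hugo release.

```shell
# Version pinned in one place; 0.55.6 matches the URL used in the build stage.
HUGO_VERSION="${HUGO_VERSION:-0.55.6}"

# Hypothetical helper: print the release archive URL for a given version.
# Assumes the same file naming as the v0.55.6 release.
hugo_url() {
    printf 'https://github.com/gohugoio/hugo/releases/download/v%s/hugo_%s_Linux-64bit.tar.gz\n' "$1" "$1"
}

# Hypothetical helper: download and install Hugo.
# Needs curl, tar, network access, and write access to /usr/local/bin.
install_hugo() {
    curl -fsSL "$(hugo_url "$1")" | tar -xz && mv hugo /usr/local/bin/hugo
}

# In a before_script you would call: install_hugo "$HUGO_VERSION"
```

Nothing is downloaded until install_hugo is actually called, so the helpers can live in a small script that the before_script sources.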

When all of the setup is done, the main script will run, which is the Gulp task that we saw previously. The final product should be the public directory, which is saved as an artifact so that it can be used in later stages of the pipeline. Remember, each stage in the pipeline starts with a clean slate unless you tell it otherwise.
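Because later stages depend entirely on that public directory, you may want the build to fail loudly when it comes out empty. Here is a minimal sketch of such a check. The check_build_output name is hypothetical and not part of the original configuration, and the only thing it verifies is that at least one HTML file exists.

```shell
# Hypothetical helper: fail when the build output directory is missing
# or does not contain a single HTML file.
check_build_output() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "missing directory: $dir" >&2
        return 1
    fi
    # Keep only the first match; an empty result means nothing was built.
    first_html=$(find "$dir" -type f -name '*.html' | head -n 1)
    if [ -z "$first_html" ]; then
        echo "no HTML files in: $dir" >&2
        return 1
    fi
    return 0
}
```

Calling check_build_output public right after npx gulp build would stop the pipeline before an empty artifact is handed to the next stage.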

After the project is built, assuming no errors happened, the next stage happens:

dockerize:
    stage: dockerize
    before_script:
        - docker login $CI_REGISTRY -u nraboy -p $CI_DOCKER_ACCESS_TOKEN
    script:
        - docker build -t $CI_REGISTRY/nraboy/www.thepolyglotdeveloper.com .
        - docker push $CI_REGISTRY/nraboy/www.thepolyglotdeveloper.com

If you’ve enabled the GitLab container registry for your project, you can build a Docker image and push it to the registry. The docker build command assumes a Dockerfile exists at the root of the project. The $CI_REGISTRY variable is predefined by GitLab, but $CI_DOCKER_ACCESS_TOKEN is a custom variable that we’ll define later. Essentially it is a password with write permissions; without it we wouldn’t be able to push the image to the registry.
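Passing the token with -p works, but Docker warns that it is insecure because the value ends up on the command line. The docker login command also accepts --password-stdin, so one alternative is to pipe the token in. This is a sketch rather than the configuration used on The Polyglot Developer, and registry_login is a hypothetical helper name.

```shell
# Hypothetical helper: log in to a registry without putting the token
# on the docker command line.
registry_login() {
    registry="$1"
    user="$2"
    token="$3"
    # printf avoids a trailing newline being sent as part of the token.
    printf '%s' "$token" | docker login -u "$user" --password-stdin "$registry"
}
```

In the dockerize stage this could be called as registry_login "$CI_REGISTRY" nraboy "$CI_DOCKER_ACCESS_TOKEN".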

Alright, let’s look at the final stage:

deploy:
    image: "debian:buster"
    stage: deploy
    before_script:
        - 'which ssh-agent || ( apt-get update -y && apt-get install openssh-client -y )'
        - 'which rsync || ( apt-get update -y && apt-get install rsync -y )'
        - eval $(ssh-agent -s)
        - echo "$SSH_PRIVATE_KEY" | base64 --decode | tr -d '\r' | ssh-add - > /dev/null
        - mkdir -p ~/.ssh
        - chmod 700 ~/.ssh
        - ssh-keyscan -t rsa HOST >> ~/.ssh/known_hosts
    script:
        - rsync -az public/ USER@HOST:PATH --delete
    only:
        - schedules

The deploy stage uses a different image. I’ve set this image to match what I’m using on my DigitalOcean VPS to avoid any kind of inconsistencies. In the before_script, the appropriate system packages are installed and my SSH private key is decoded and added to the SSH agent in the image. The private key is base64 encoded and exists as part of an $SSH_PRIVATE_KEY variable that we’ll define shortly. This prevents sensitive information from existing in our configuration.

There is a specific detail that took me quite some time to figure out.

When the CI runner tries to connect to your server for the first time it will be asked to accept the fingerprint. Since this is an automated process, you can’t exactly jump in and accept. For this reason, you have to do the following:

ssh-keyscan -t rsa HOST >> ~/.ssh/known_hosts

Make sure you swap HOST with the actual host of your server. In fact, don’t even bother using a CNAME, use the actual IP of your server. Once the before_script is done, you can use rsync to transfer the files from the public build artifact to your server. Make sure to swap out USER, HOST, and PATH with the actual information for your server and project.
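Since --delete removes anything on the server that is not in the public directory, it can be worth previewing a transfer before trusting it. The sketch below wraps the same rsync call and adds an optional dry run. The deploy_site function and the DRY_RUN variable are hypothetical names, not part of the original configuration.

```shell
# Hypothetical helper: sync a build directory to a remote path.
# Set DRY_RUN=1 to list what would change without touching the server.
deploy_site() {
    src="$1"
    dest="$2"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        rsync -az --delete --dry-run --itemize-changes "$src" "$dest"
    else
        rsync -az --delete "$src" "$dest"
    fi
}
```

For example, DRY_RUN=1 deploy_site public/ USER@HOST:PATH prints the pending changes, and the same call without DRY_RUN performs the real transfer.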

As an optional step, you can define how the deploy stage is run. Rather than deploying every time I push new code, I decided to have it run only through a scheduled GitLab pipeline. This means I can push code and the deploy stage does nothing, but when I create a cron schedule in the GitLab dashboard, this stage will run.
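The only keyword handles this at the configuration level. If you ever need the same decision inside a script, GitLab exposes the predefined CI_PIPELINE_SOURCE variable, which is set to schedule for scheduled pipelines. A minimal sketch, with is_scheduled_pipeline being a hypothetical helper name:

```shell
# Hypothetical helper: succeed only when the pipeline was started by a schedule.
# CI_PIPELINE_SOURCE is set by GitLab; it is empty when run outside of CI.
is_scheduled_pipeline() {
    [ "${CI_PIPELINE_SOURCE:-}" = "schedule" ]
}
```

A script line such as is_scheduled_pipeline && echo "deploying" would then only print on scheduled runs.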

Configuring the Project on GitLab

In theory, the Gulp workflow and the GitLab CI configuration are ready to go. We’re not quite done yet, as we need to provide the variable information directly in GitLab and define a schedule so that the project is automatically built daily without any human intervention.

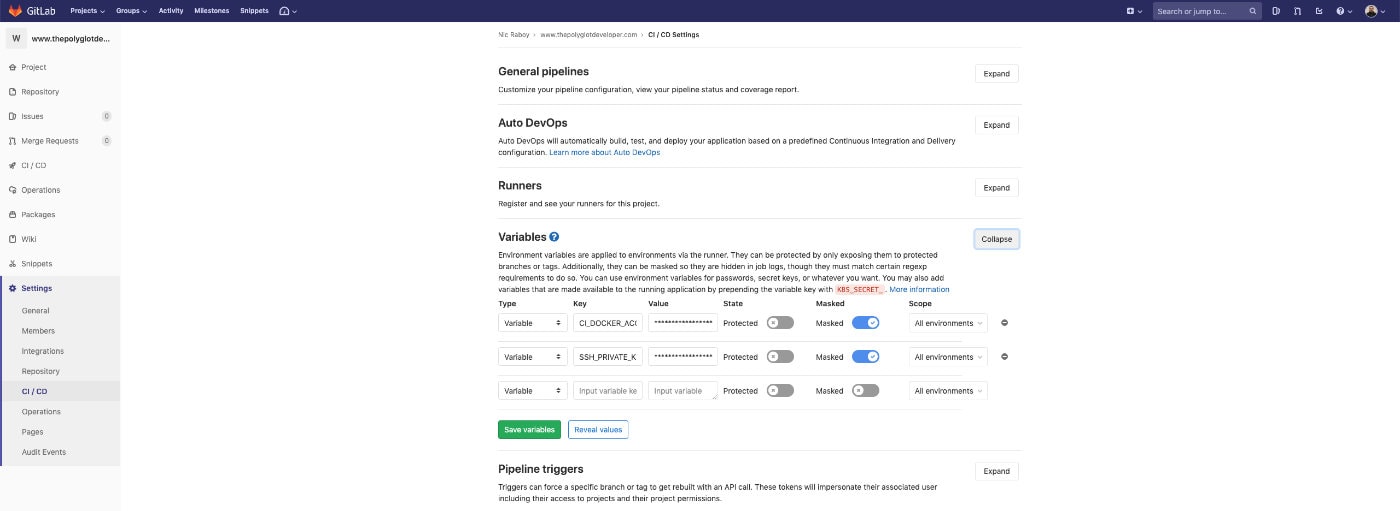

Within GitLab, navigate to the Settings -> CI / CD section of your project:

You should notice a Variables section and in it, the opportunity to add variables that can be accessed by your pipeline. Create a $CI_DOCKER_ACCESS_TOKEN variable and a $SSH_PRIVATE_KEY variable, both masked so they don’t appear in your logs.

For the private key, remember it has to be a base64 encoded string. Pasting your raw private key into the text box will not work. There are many ways to base64 encode a string. If you’re on a Mac, you can execute the following:

base64 -i <input-file> -o <output-file>

Just pass in the private key file and define an output file to contain the base64 version of it.
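On Linux the base64 flags differ from the Mac version, and some implementations wrap their output across several lines. One portable approach, sketched below under the assumption that the value should end up on a single line, is to read the file from standard input and strip the newlines. The encode_key and decode_key names are hypothetical; decode_key simply mirrors the decode step from the deploy stage so you can confirm the round trip before pasting the value into GitLab.

```shell
# Hypothetical helper: base64-encode a file onto a single line.
encode_key() {
    base64 < "$1" | tr -d '\n'
}

# Hypothetical helper: decode the value the same way the deploy stage does.
decode_key() {
    printf '%s' "$1" | base64 --decode | tr -d '\r'
}
```

Running encode_key on your private key file prints the string to paste into the variable, and comparing the decode_key output against the original file confirms nothing was lost.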

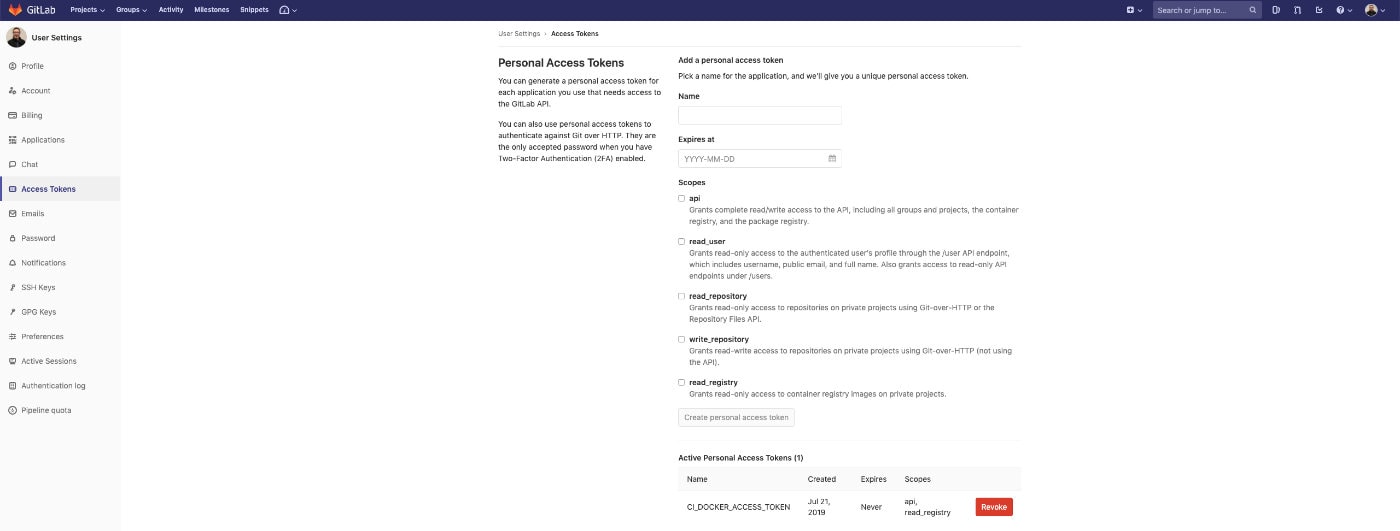

Now what do you add for the $CI_DOCKER_ACCESS_TOKEN variable? We actually need to navigate away to find this information. You need to go into the settings section of your user account and then click Access Tokens.

You need to create an access token that has api access and read_registry access. Again, the $CI_DOCKER_ACCESS_TOKEN variable is only necessary if you want to build Docker images to be stored on GitLab alongside your project. After you create your personal access token, you can navigate back to the pipeline variables section and add it.

With the variables out of the way, a pipeline schedule can be created. As of now, if you pushed your project to GitLab, it would be built and a Docker image would be created. If you don’t have the only keyword in your configuration, it would also be deployed on every push rather than on a schedule.

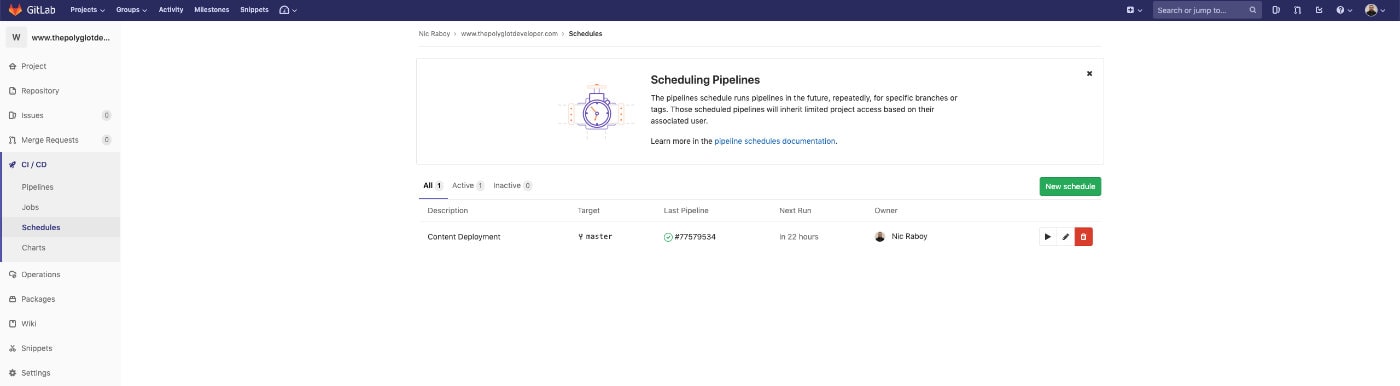

Within your project on GitLab, navigate to CI / CD -> Schedules to define one or more schedules.

Create a new schedule and define how frequently it should run on your project. If using my .gitlab-ci.yml example, the schedule will run all three stages. The deploy stage runs only when the pipeline is started by a schedule.

Conclusion

You just saw how I’m using GitLab CI to build and deploy The Polyglot Developer automatically. If you’re familiar with Hugo, you know you can write content with a future date that won’t be built until that date is met. This is where scheduling is beneficial because you won’t have to go back and build manually when the date comes around.

You might have noticed that while I am building a Docker image, I’m not actually using it yet. My DigitalOcean configuration is still using Apache httpd and isn’t quite Docker ready, but at least when that time comes, I’ll have the Docker process in place.

For more continuous integration and continuous deployment examples, check out my previous tutorial which focuses on a self-hosted version of GitLab and a Raspberry Pi.

This tutorial contains affiliate links.