Create An Amazon Alexa Skill Using Node.js And AWS Lambda

Recently I published my first skill for Amazon’s Alexa voice service, called BART Control. The skill relies on a variety of technologies and public APIs to be useful. Specifically, I developed it with Node.js and the AWS Lambda service. However, that is only a high-level summary of what went into making the skill possible. What must be done to get a functional skill that works on Amazon Alexa powered devices?

We’re going to see how to create a simple Amazon Alexa skill using Node.js and Lambda that works on various Alexa powered devices such as the Amazon Echo.

To be clear, I will not be showing you how to create the BART Control skill that I released, as it is closed source. Instead we’ll be working on an even simpler project, just to get your feet wet. We’ll explore more complicated skills in the future. The skill we’ll create will tell us something interesting upon request.

The Requirements

While we don’t need an Amazon Alexa powered device such as an Amazon Echo to make this tutorial a success, it certainly is nice to have. My Amazon Echo is great!

Here are the few requirements that you must satisfy before continuing:

- Node.js 4.0 or higher

- An AWS account

Lambda is a part of AWS. While you don’t need Lambda to create a skill, Amazon has made it very convenient to use for this purpose. There is a free tier to AWS Lambda, so it should be relatively cheap, if not free. Lambda supports a variety of languages, but we’ll be using Node.js.

Building a Simple Node.js Amazon Alexa Skill

We need to create a new Node.js project. As a Node.js developer, you’re probably most familiar with the Express framework, as it is a common choice amongst developers. Lambda does not use Express; it has its own handler-based design.

Before we get too far ahead of ourselves, create a new directory somewhere on your computer. I’m calling mine alexa-skill-the-polyglot and putting it on my desktop. Inside this project directory we need to create the following directory and files:

mkdir src

touch src/index.js

touch src/data.js

If your Command Prompt (Windows) or Terminal (Mac and Linux) doesn’t have the mkdir and touch commands, go ahead and create the directory and files manually.

We can’t start developing the Alexa skill yet. First we need to download the Alexa SDK for Node.js. To do this, execute the following from your Terminal or Command Prompt:

npm install alexa-sdk --save

The SDK makes development incredibly easy in comparison to what it was previously.

Before we start coding the handler file for our Lambda function, let’s come up with a dataset to be used. Inside the src/data.js file, add the following:

module.exports.java = [
    "Java was first introduced to the world in 1995 by Sun Microsystems",
    "Lambda expressions were first included in Java 8"
];

module.exports.ionic = [
    "Ionic Framework is a hybrid mobile development framework that sits on top of Apache Cordova",
    "The first version of Ionic Framework uses AngularJS whereas the second version uses Angular",
    "Ionic Framework lets you develop applications with HTML and JavaScript"
];

The above dataset is very simple. We will have two different scenarios. If the user asks about Java we have two possible responses. If the user asks about Ionic Framework, we have three possible responses. The goal here is to randomize these responses based on the technology the user requests information about.
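As a small aside, the randomization can be made independent of how many entries each list has. The sketch below is only an illustration: pickRandom is a hypothetical helper that is not part of the original project, and the java array is an inline copy of the dataset so the snippet runs on its own.

```javascript
// Hypothetical helper (not part of the original project): pick a random
// entry from any non-empty array, so no list length is hard-coded.
function pickRandom(items) {
    var index = Math.floor(Math.random() * items.length);
    return items[index];
}

// Inline copy of the Java facts from src/data.js, so the sketch is self-contained.
var java = [
    "Java was first introduced to the world in 1995 by Sun Microsystems",
    "Lambda expressions were first included in Java 8"
];

// Prints one of the two facts, chosen at random.
console.log(pickRandom(java));
```

With a helper like this, adding a third Java fact to the dataset would not require touching the code that picks a response.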

Now we can take a look at the core logic file.

Open the project’s src/index.js file and include the following code. Don’t worry, we’re going to break everything down afterwards.

var Alexa = require('alexa-sdk');
var Data = require("./data");

const skillName = "The Polyglot";

var handlers = {

    "LanguageIntent": function () {
        function getRandomInt(min, max) {
            return Math.floor(Math.random() * (max - min)) + min;
        }
        var speechOutput = "";
        if(this.event.request.intent.slots.Language.value && this.event.request.intent.slots.Language.value.toLowerCase() == "java") {
            speechOutput = Data.java[getRandomInt(0, 2)];
        } else if(this.event.request.intent.slots.Language.value && this.event.request.intent.slots.Language.value.toLowerCase() == "ionic framework") {
            speechOutput = Data.ionic[getRandomInt(0, 3)];
        } else {
            speechOutput = "I don't have anything interesting to share regarding what you've asked.";
        }
        this.emit(':tellWithCard', speechOutput, skillName, speechOutput);
    },

    "AboutIntent": function () {
        var speechOutput = "The Polyglot Developer, Nic Raboy, is from San Francisco, California";
        this.emit(':tellWithCard', speechOutput, skillName, speechOutput);
    },

    "AMAZON.HelpIntent": function () {
        var speechOutput = "";
        speechOutput += "Here are some things you can say: ";
        speechOutput += "Tell me something interesting about Java. ";
        speechOutput += "Tell me about the skill developer. ";
        speechOutput += "You can also say stop if you're done. ";
        speechOutput += "So how can I help?";
        this.emit(':ask', speechOutput, speechOutput);
    },

    "AMAZON.StopIntent": function () {
        var speechOutput = "Goodbye";
        this.emit(':tell', speechOutput);
    },

    "AMAZON.CancelIntent": function () {
        var speechOutput = "Goodbye";
        this.emit(':tell', speechOutput);
    },

    "LaunchRequest": function () {
        var speechText = "";
        speechText += "Welcome to " + skillName + ". ";
        speechText += "You can ask a question like, tell me something interesting about Java. ";
        var repromptText = "For instructions on what you can say, please say help me.";
        this.emit(':ask', speechText, repromptText);
    }

};

exports.handler = function (event, context) {
    var alexa = Alexa.handler(event, context);
    alexa.appId = "amzn1.echo-sdk-ams.app.APP_ID";
    alexa.registerHandlers(handlers);
    alexa.execute();
};

So what exactly is happening in the above code?

The first thing we’re doing is including the Alexa SDK and the dataset that we plan to use within our application. The handlers object is where all the magic happens.

"LaunchRequest": function () {
    var speechText = "";
    speechText += "Welcome to " + skillName + ". ";
    speechText += "You can ask a question like, tell me something interesting about Java. ";
    var repromptText = "For instructions on what you can say, please say help me.";
    this.emit(':ask', speechText, repromptText);
}

Alexa has a few different lifecycle events. You can manage when a session is started, when a session ends, and when the skill is launched. The LaunchRequest event is when a skill is specifically opened. For example:

Alexa, open The Polyglot

The above command will open the skill The Polyglot and trigger the LaunchRequest event. The other lifecycle events trigger based on usage. For example, a session may not end immediately. There are scenarios where Alexa may ask for more information and keep the session open until a response is given.

In any case, our simple skill will not make use of the session events.

In our LaunchRequest event, we tell the user what they can do and how they can get help. Emitting :ask keeps the session open until a response is given, whereas :tell ends the session once Alexa has spoken. Based on the request given, a different handler will be executed further down in our code.
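To make the difference between asking and telling more concrete, here is a rough sketch of the kind of response JSON a skill sends back to Alexa. The buildResponse function is hypothetical and is not part of alexa-sdk; the SDK builds the real response for you, and its actual output may differ in details such as how the speech is encoded. The field names (outputSpeech, reprompt, shouldEndSession) follow the Alexa response format.

```javascript
// Hypothetical helper, NOT part of alexa-sdk: builds a simplified Alexa
// response. Passing a reprompt means we expect an answer from the user.
function buildResponse(speechText, repromptText) {
    var response = {
        outputSpeech: { type: "PlainText", text: speechText },
        // With a reprompt the session stays open; without one it ends.
        shouldEndSession: !repromptText
    };
    if (repromptText) {
        response.reprompt = {
            outputSpeech: { type: "PlainText", text: repromptText }
        };
    }
    return { version: "1.0", response: response };
}

// Roughly what an ask looks like: the session stays open.
var askLike = buildResponse("Welcome to The Polyglot.", "Please say help me.");
console.log(askLike.response.shouldEndSession); // prints false

// Roughly what a tell looks like: the session ends.
var tellLike = buildResponse("Goodbye");
console.log(tellLike.response.shouldEndSession); // prints true
```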

This brings us to the other handlers in our code.

If you’re not too familiar with how Alexa skills work, a skill has intents that perform actions. You can have as many as you want, and each one is triggered based on what Alexa detects in the user’s phrases.

For example:

"AboutIntent": function () {
    var speechOutput = "The Polyglot Developer, Nic Raboy, is from San Francisco, California";
    this.emit(':tellWithCard', speechOutput, skillName, speechOutput);
}

If the AboutIntent is triggered, Alexa will respond with a card in the mobile application as well as a spoken answer. We don’t know what triggers the AboutIntent yet, but we know that it is a possible option.

"LanguageIntent": function () {
    function getRandomInt(min, max) {
        return Math.floor(Math.random() * (max - min)) + min;
    }
    var speechOutput = "";
    if(this.event.request.intent.slots.Language.value && this.event.request.intent.slots.Language.value.toLowerCase() == "java") {
        speechOutput = Data.java[getRandomInt(0, 2)];
    } else if(this.event.request.intent.slots.Language.value && this.event.request.intent.slots.Language.value.toLowerCase() == "ionic framework") {
        speechOutput = Data.ionic[getRandomInt(0, 3)];
    } else {
        speechOutput = "I don't have anything interesting to share regarding what you've asked.";
    }
    this.emit(':tellWithCard', speechOutput, skillName, speechOutput);
}

The intent above is a bit more complicated. Here we are expecting a parameter to be passed. These parameters are known as slot values, and they vary with the user’s request.

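If the user provides java as the parameter, the randomization function picks a Java response from our data file. Note that the upper bound passed to getRandomInt is exclusive, so getRandomInt(0, 2) returns either 0 or 1, matching the two Java entries. The same applies to ionic framework and its three entries. If neither was given, we fall back to a default response saying we have nothing to share.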
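To see where the slot value comes from, it can help to look at a trimmed-down request. The sketch below is an illustration under assumptions: sampleEvent only contains the fields our handler reads (real events carry more), and getSlotValue is a hypothetical helper that returns null instead of throwing when the slot is missing.

```javascript
// Trimmed-down example of an IntentRequest event; real events carry more fields.
var sampleEvent = {
    request: {
        type: "IntentRequest",
        intent: {
            name: "LanguageIntent",
            slots: {
                Language: { name: "Language", value: "Java" }
            }
        }
    }
};

// Hypothetical helper: return the lowercased slot value, or null if the
// intent, the slots object, the slot, or its value is absent.
function getSlotValue(event, slotName) {
    var intent = event.request && event.request.intent;
    var slot = intent && intent.slots && intent.slots[slotName];
    return slot && slot.value ? slot.value.toLowerCase() : null;
}

console.log(getSlotValue(sampleEvent, "Language")); // prints "java"
```

Inside a handler, the same helper could be called with this.event in place of sampleEvent.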

In a production scenario you probably want to do a little better than just have two possible parameter options. For example, what if the user says ionic rather than ionic framework? Or what happens if Alexa interprets the user input as tonic rather than ionic? These are scenarios that you have to account for.
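One possible way to handle such variations is an alias table that maps every phrase you expect to a key in the dataset. This is only a sketch: the aliases object and the resolveTopic function are hypothetical names, and the entries (including tonic) are assumptions you would tune from real usage.

```javascript
// Hypothetical alias table: every spoken variant maps to a key in the dataset.
var aliases = {
    "java": "java",
    "ionic": "ionic",
    "ionic framework": "ionic",
    "tonic": "ionic" // assumed mishearing of "ionic"
};

// Normalize the spoken value and look it up; null means "unknown topic".
function resolveTopic(spokenValue) {
    if (!spokenValue) {
        return null;
    }
    var key = spokenValue.trim().toLowerCase();
    return aliases.hasOwnProperty(key) ? aliases[key] : null;
}

console.log(resolveTopic("Ionic Framework")); // prints "ionic"
console.log(resolveTopic("tonic"));           // prints "ionic"
console.log(resolveTopic("python"));          // prints null
```

Because the resolved keys match the property names exported from src/data.js, the result could be used to look up the right list of responses directly.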

This brings us to the handler function that Lambda uses:

exports.handler = function (event, context) {
    var alexa = Alexa.handler(event, context);
    alexa.appId = "amzn1.echo-sdk-ams.app.APP_ID";
    alexa.registerHandlers(handlers);
    alexa.execute();
};

In this function we initialize everything. We define the application id, register all the handlers we just created, and call execute so the incoming request is routed to the matching handler. Lambda refers to this function as index.handler because the file is called index.js and the exported function is named handler.

Let’s come up with those phrases that can trigger the intents in our application.

Creating a List of Sample Phrases Called Utterances

Amazon recommends you have as many phrases as you can possibly think up. During the deployment process to the Alexa Skill Store and AWS Lambda, you’ll need an utterances list.

For our example project, we might have the following utterances for the AboutIntent that we had created:

AboutIntent who developed this skill

AboutIntent tell me about the developer

AboutIntent give me information about the developer

AboutIntent who is the author

I listed four possible phrases, but I’m sure you can imagine that there are so many more possibilities. Try to think of everything the user might ask in order to trigger the AboutIntent code.

Things are a bit different when it comes to our other intent, LanguageIntent, because there is an optional parameter. Utterances for this intent might look like the following:

LanguageIntent tell me something interesting about {Language}

LanguageIntent what can you tell me about {Language}

LanguageIntent anything interesting about the {Language} development technology

Notice my use of the {Language} placeholder. Alexa will fill in the gap and treat whatever falls into that slot as a parameter. That same parameter name will be used in the JavaScript code.
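When reviewing your utterances, it can be useful to see what the phrases look like with real slot values filled in. The sketch below does that substitution locally. It is only a review aid: expandUtterances is a hypothetical helper, and it does not reproduce how Alexa itself matches speech to utterances.

```javascript
// Two of the LanguageIntent templates and the slot values used in this skill.
var templates = [
    "tell me something interesting about {Language}",
    "what can you tell me about {Language}"
];
var slotValues = ["java", "ionic framework"];

// Hypothetical helper: substitute every value into every template.
function expandUtterances(templates, slotName, values) {
    var placeholder = "{" + slotName + "}";
    var phrases = [];
    templates.forEach(function (template) {
        values.forEach(function (value) {
            phrases.push(template.split(placeholder).join(value));
        });
    });
    return phrases;
}

// Prints four phrases, starting with
// "tell me something interesting about java".
expandUtterances(templates, "Language", slotValues).forEach(function (phrase) {
    console.log(phrase);
});
```

Reading the expanded phrases aloud is a quick way to spot templates that sound unnatural for some slot values.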

Again, it is very important to come up with every possible phrase. Not coming up with enough phrase possibilities will lead to a poor user experience.

Can you believe the development portion is done? Now we can focus on the deployment of our Amazon Alexa skill.

Deploying the Skill to AWS Lambda

Deployment is a two part process. Not only do we need to deploy to AWS Lambda, but we also need to deploy to the Amazon Alexa Skill store.

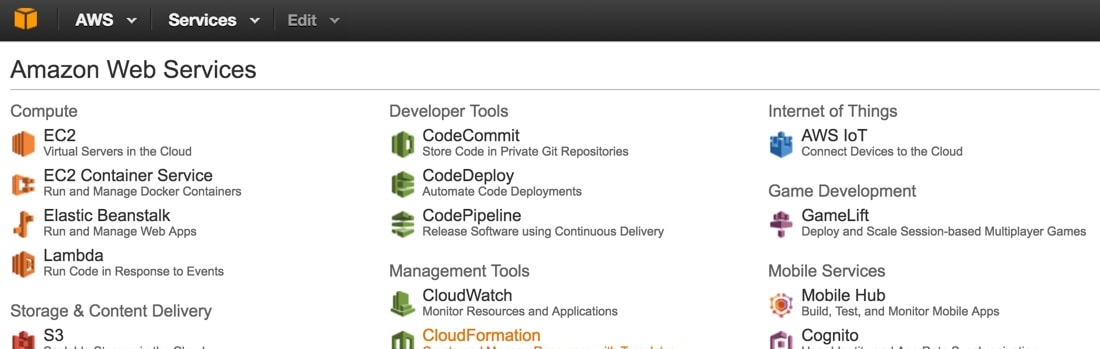

Starting with the AWS Lambda portion, log into your AWS account and choose Lambda.

At this point we work towards creating a new Lambda function. As of right now, it is important that you choose Northern Virginia as your instance location. It is the only location that supports Alexa with Lambda. With that said, let’s go through the process.

Choose Create a Lambda function, but don’t select a blueprint from the list. Go ahead and continue. Choose Alexa Skills Kit as your Lambda trigger and proceed ahead.

On the next screen you’ll want to give your function a name and select to upload a ZIP archive of the project. The ZIP that you upload should contain only the two files that we created and the node_modules directory, nothing else. We also want to set the role to lambda_basic_execution.

After you upload we can proceed to linking the Lambda function to an Alexa skill.

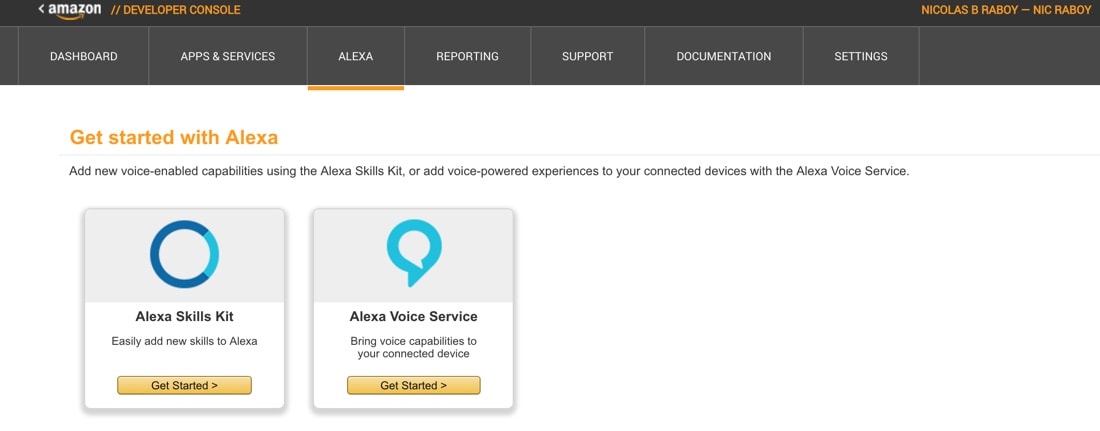

To create an Alexa skill you’ll want to log into the Amazon Developer Dashboard, which is not part of AWS. It is part of the Amazon App Store for mobile applications.

Once you are signed in, you’ll want to select Alexa from the tab list followed by Alexa Skills Kit.

From this area you’ll want to choose Add a New Skill and start the process. On the Skill Information page you’ll be able to obtain an application id after you save. This is the id that should be assigned to alexa.appId in src/index.js, replacing the placeholder value.

In the Interaction Model we need to define an intent schema. For our sample application, it will look something like this:

{
    "intents": [
        {
            "intent": "LanguageIntent",
            "slots": [
                {
                    "name": "Language",
                    "type": "LIST_OF_TECH"
                }
            ]
        },
        {
            "intent": "AboutIntent"
        }
    ]
}
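Since the intent names in the schema must line up with the handler names in the JavaScript code, a quick local check can catch typos before you deploy. This is a sketch under assumptions: findUnhandledIntents is a hypothetical helper, and handlerNames is typed out by hand here so the snippet runs on its own.

```javascript
// The intent schema from above, as a JavaScript object.
var schema = {
    intents: [
        { intent: "LanguageIntent", slots: [{ name: "Language", type: "LIST_OF_TECH" }] },
        { intent: "AboutIntent" }
    ]
};

// Handler names from src/index.js, listed by hand for this sketch.
var handlerNames = [
    "LanguageIntent", "AboutIntent", "AMAZON.HelpIntent",
    "AMAZON.StopIntent", "AMAZON.CancelIntent", "LaunchRequest"
];

// Hypothetical helper: list schema intents that have no matching handler.
function findUnhandledIntents(schema, handlerNames) {
    return schema.intents
        .map(function (entry) { return entry.intent; })
        .filter(function (name) { return handlerNames.indexOf(name) === -1; });
}

console.log(findUnhandledIntents(schema, handlerNames)); // prints []
```

In the real project, Object.keys(handlers) could supply the handler names instead of a hand-written list.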

The slot type for our LanguageIntent is a list that we must define. Create a custom slot type and include the following possible entries:

ionic framework

java

Go ahead and paste the sample utterances that we had created previously.

In the Configuration section, use the ARN from the Lambda function that we created. It can be found in the AWS dashboard. Go ahead and fill out all other sections to the best of your ability.

Conclusion

You just saw how to create a very simple skill for Amazon’s Alexa voice assistant. While our skill only had two possible intent actions, one of the intents had parameters while the other did not. This skill was developed with Node.js and hosted on AWS Lambda. The user is able to ask about the author of the skill as well as information about Java or Ionic Framework.
