Building Amazon Alexa Skills With Node.js, Revisited

A little more than two years ago, when the Amazon Echo was first picking up steam and I was first exposed to virtual assistants, I wrote a tutorial about creating a Skill for Amazon Alexa using Node.js and plain JavaScript. In that tutorial, titled Create an Amazon Alexa Skill Using Node.js and AWS Lambda, we saw how to create intent functions and sample utterances in preparation for deployment on AWS Lambda. I later wrote a tutorial titled Test Amazon Alexa Skills Offline with Mocha and Chai for Node.js, which focused on building unit tests for these Skills and their intent functions. Fast forward to now, and a few things have changed in the realm of Skill development.

In this tutorial, we’re going to see how to build a Skill for Alexa-powered devices using Node.js and test it using popular frameworks and libraries such as Mocha and Chai.

I recently wrote and published a Skill called Slick Dealer, although not with Node.js. After publishing it, I wrote a tutorial about it titled Build an Alexa Skill with Golang and AWS Lambda. As the title suggests, that Skill was written in Golang. We’re going to create the same Skill, but this time we’re going to use Node.js instead.

We’re going to create a Skill for one of my favorite deal-hunting websites, Slickdeals.

What the Skill accomplishes is quite simple. We’ll request the latest deals with our voice, and the Skill will consume the Slickdeals RSS feed, parse it, and read back each of the deals in that feed.

Developing an AWS Lambda Function with Node.js to be Deployed as an Alexa Skill

There are multiple parts to any given Alexa Skill, most notably the logic layer and the language comprehension layer. We’re going to start by designing the logic that parses an RSS feed and constructs a response that can be spoken.

Let’s start by creating a new Node.js project on our computer:

```bash
npm init -y
npm install ask-sdk-core --save
npm install request request-promise --save
npm install ssml-builder --save
npm install xml2js --save
```

The above commands create a new project and install each of the dependencies this application needs. The ask-sdk-core package, which is what src/main.js requires, lets us work with properly formatted requests from Alexa and build properly formatted responses for it. The request-promise package, which needs request installed alongside it, lets us make HTTP requests to a remote service, in this case the RSS feed we want to consume. Since the RSS feed is in XML format, we use the xml2js package to convert it into a JavaScript object. Finally, we use ssml-builder to control how text is spoken. We’ll dive deeper into SSML as we progress in this tutorial.

With the project dependencies installed, we need to install our development dependencies which include what we need for testing our application offline. From within your project, execute the following:

```bash
npm install mocha --save-dev
npm install chai --save-dev
```

At this point in time we have all the necessary dependencies available to us. However, since we will be writing tests, our project should be structured in a certain way. Go ahead and create the following directories and files within your project:

```bash
mkdir src
mkdir test
touch src/main.js
touch test/about.js
touch test/frontpagedeals.js
```

If you don’t have the mkdir and touch commands on your operating system, create the directories and files manually. All of our core logic will live in the project’s src/main.js file, and we’re going to have a test case for each possible interaction with Alexa.

With the project structure in place, add the following code to the src/main.js file:

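```javascript
const Alexa = require("ask-sdk-core");
const Request = require("request-promise");
const SSMLBuilder = require("ssml-builder");
const { parseString } = require("xml2js");

// Catch-all error handler. canHandle() always returns true, so any error raised
// while dispatching a request, including "no handler matched", ends up here.
const ErrorHandler = {
    canHandle(input, error) {
        return true;
    },
    handle(input, error) {
        // Log the underlying error so it shows up in CloudWatch.
        console.log(`Error handled: ${error.message}`);
        return input.responseBuilder
            .speak("Sorry, I couldn't understand what you asked. Please try again.")
            .reprompt("Sorry, I couldn't understand what you asked. Please try again.")
            .getResponse();
    }
};

// Module-level, so it can be reused while Lambda keeps the container warm.
let skill;

exports.handler = async (event, context) => {
    if (!skill) {
        skill = Alexa.SkillBuilders.custom()
            .addRequestHandlers(
                // Handlers go here...
            )
            .addErrorHandlers(ErrorHandler)
            .create();
    }
    const response = await skill.invoke(event, context);
    return response;
};
```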

So what is the above code doing? First, we import each of the project dependencies we installed earlier. Next, we define our error handler, which also acts as a blueprint for every handler to come: each handler is an object with a canHandle function and a handle function. In the ErrorHandler object, canHandle always returns true, which means this handler takes care of any error raised while processing a request, including a request that no other handler can handle. The handle function is where the actual logic goes, and in this case we just build a simple response using the Alexa SDK. The response contains speech output plus a reprompt, which keeps the session open and asks the user again if they don’t reply.

The ErrorHandler object is never called directly by Alexa or AWS Lambda. Lambda only calls the exports.handler function, once per invocation of this particular function. Because a Lambda function has a single entry point, we need dispatching logic that routes each request to the appropriate code, and that is what the handlers provide.

If skill is not yet defined, we initialize it with the Alexa SDK and plug in our ErrorHandler as a catch-all for bad requests. On later invocations skill may already be defined, because AWS Lambda often reuses a warm container instead of starting a fresh one, and module-level variables survive between those invocations. Reusing what is already available saves us from rebuilding the Skill on every request. Finally, we invoke the Skill with the incoming event and return its response to the client, Alexa, so it can be spoken to the user.
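The reuse described above can be sketched without the SDK. In this minimal example, buildSkill is a hypothetical stand-in for the Alexa.SkillBuilders.custom() chain that counts how many times it runs; calling the handler twice in the same process builds the skill only once, which is what happens when Lambda reuses a warm container.

```javascript
// Sketch of the lazy-initialization pattern used in exports.handler.
// buildSkill() is a hypothetical stand-in for
// Alexa.SkillBuilders.custom()...create(); it counts how often it runs.
let buildCount = 0;

function buildSkill() {
    buildCount += 1;
    return {
        invoke: async (event) => ({ version: "1.0", handled: event.name })
    };
}

// Module scope: in a warm Lambda container this variable keeps its value
// between invocations, so the skill is only built on a cold start.
let skill;

const handler = async (event) => {
    if (!skill) {
        skill = buildSkill();
    }
    return skill.invoke(event);
};
```

A cold start loads the module in a fresh process, so buildCount starts at zero and the skill is built again.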

So let’s create our first handler:

```javascript
const AboutHandler = {
    // Only handle IntentRequests for the AboutIntent.
    canHandle(input) {
        return input.requestEnvelope.request.type === "IntentRequest" && input.requestEnvelope.request.intent.name === "AboutIntent";
    },
    // Speak a short description and show the same text on a card in the Alexa app.
    handle(input) {
        return input.responseBuilder
            .speak("Slick Dealer was created by Nic Raboy in Tracy, California")
            .withSimpleCard("About Slick Dealer", "Slick Dealer was created by Nic Raboy in Tracy, California")
            .getResponse();
    }
};
```

The AboutHandler responds to requests for information about the Skill. As part of the dispatch process, the SDK calls its canHandle function. If the request is an IntentRequest and the intent, which Alexa determines based on the configuration we’ll do later, is AboutIntent, canHandle returns true and the handle function runs. If those criteria are not met, the AboutHandler was not the desired handler and the SDK moves on. In the handle function, we create a spoken response as well as a card to be displayed within the Alexa mobile application.
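Since every handler repeats the same "is this an IntentRequest for intent X" check, it can be pulled into a helper. The sketch below assumes a hypothetical isIntentRequest function that is not part of the original code; recent releases of ask-sdk-core ship similar utilities (such as Alexa.getRequestType() and Alexa.getIntentName()), so check the version you have installed before writing your own.

```javascript
// Hypothetical helper: true when the request is an IntentRequest for intentName.
// The && short-circuits, so requests without an intent (such as a LaunchRequest)
// never reach request.intent.name.
function isIntentRequest(input, intentName) {
    const request = input.requestEnvelope.request;
    return request.type === "IntentRequest" && request.intent.name === intentName;
}

// canHandle() rewritten with the helper; handle() is unchanged.
const AboutHandler = {
    canHandle(input) {
        return isIntentRequest(input, "AboutIntent");
    },
    handle(input) {
        return input.responseBuilder
            .speak("Slick Dealer was created by Nic Raboy in Tracy, California")
            .withSimpleCard("About Slick Dealer", "Slick Dealer was created by Nic Raboy in Tracy, California")
            .getResponse();
    }
};
```

The same helper would let FrontpageDealsHandler’s canHandle shrink to isIntentRequest(input, "FrontpageDealsIntent").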

Not so bad, right?

Alright, this is where it gets a little more complex. We need to consume data from Slickdeals and make a response from it. To do this, we should start by creating a function to get the data:

```javascript
// Fetch the Slickdeals front page RSS feed and parse the XML into an object.
// Any network or parse error is passed on to the caller.
const getFrontpageDeals = async () => {
    const response = await Request({
        uri: "https://slickdeals.net/newsearch.php",
        qs: {
            "mode": "frontpage",
            "searcharea": "deals",
            "searchin": "first",
            "rss": "1"
        },
        json: false
    });

    // parseString uses a callback, so wrap it in a Promise that can be awaited.
    return new Promise((resolve, reject) => {
        parseString(response, (error, result) => {
            if (error) {
                return reject(error);
            }
            resolve(result);
        });
    });
};
```

The above function makes a request to the RSS feed. We know the response will be XML data, so we need to parse it into a JavaScript object. Working with callbacks is messy, so we wrap parseString in a promise that can be awaited. The data returned from the getFrontpageDeals function is a JavaScript object representation of the RSS feed, the same kind of structure you would get from parsing JSON.
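With its default options, xml2js keeps the root element (rss) and wraps every child element in an array, which is why the handler later indexes with [0]. The object below is a hand-written illustration of that shape, not real feed data, and extractTitles is a hypothetical helper that pulls the deal titles out of it.

```javascript
// Hand-written object mimicking xml2js's default output for an RSS feed:
// attributes go under "$", and every child element is an array.
const sampleFeed = {
    rss: {
        $: { version: "2.0" },
        channel: [{
            title: ["Example feed title"],
            item: [
                { title: ["Deal one"], link: ["https://example.com/1"] },
                { title: ["Deal two"], link: ["https://example.com/2"] }
            ]
        }]
    }
};

// Hypothetical helper: return the deal titles, or an empty array when the
// channel has no <item> elements (xml2js omits the key in that case).
function extractTitles(feed) {
    const channel = feed.rss.channel[0];
    const items = channel.item || [];
    return items.map((item) => item.title[0]);
}
```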

More information on parsing XML into JSON can be found in a tutorial I wrote titled, Parse an XML Response with Node.js.

Now let’s put that getFrontpageDeals function to good use.

```javascript
const FrontpageDealsHandler = {
    // Only handle IntentRequests for the FrontpageDealsIntent.
    canHandle(input) {
        return input.requestEnvelope.request.type === "IntentRequest" && input.requestEnvelope.request.intent.name === "FrontpageDealsIntent";
    },
    // Errors from getFrontpageDeals propagate to the SDK, which hands them to ErrorHandler.
    async handle(input) {
        const builder = new SSMLBuilder();
        const feedResponse = await getFrontpageDeals();

        // Speak each deal title, followed by a one-second break.
        for (const item of feedResponse.rss.channel[0].item) {
            builder.say(item.title[0]);
            builder.pause("1s");
        }

        // ssml(true) returns the markup without the outer <speak> tag;
        // responseBuilder.speak() adds that wrapper itself.
        return input.responseBuilder
            .speak(builder.ssml(true))
            .getResponse();
    }
};
```

The above FrontpageDealsHandler follows the same pattern as the previous handler. We first check that the request is an IntentRequest for the FrontpageDealsIntent, and if it is, the handle function runs. You’ll notice we format this response differently from the AboutHandler we built earlier. If we simply looped through the feed items and concatenated them, Alexa would read them far too quickly. We could add a bunch of commas and periods to slow it down, but that isn’t maintainable long term. Instead, we use Speech Synthesis Markup Language (SSML), which lets us define how text is spoken.

Using the package that we downloaded, we can start building the response. After getting our JSON data from the RSS feed, we loop through each of the items in that response. We plan to have Alexa speak each title in the feed. Using SSML, we can add a one second pause or break after every spoken phrase. This is an alternative to us adding a bunch of unnecessary punctuation.
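To make the effect of the builder concrete, the sketch below hand-rolls the kind of markup the loop produces: each title followed by a one-second break. It is an illustration, not ssml-builder’s internals, and its exact output format may differ from the library’s. It escapes XML special characters, because a raw "&" in a deal title is invalid in SSML; whether a given builder escapes for you is worth checking. The maxItems cap is an assumption added to keep responses short, since Amazon documents a size limit on output speech (8000 characters at the time of writing).

```javascript
// Escape characters that cannot appear as raw text inside SSML.
function escapeXml(text) {
    return text
        .replace(/&/g, "&amp;")
        .replace(/</g, "&lt;")
        .replace(/>/g, "&gt;")
        .replace(/"/g, "&quot;")
        .replace(/'/g, "&apos;");
}

// Hypothetical helper: speak up to maxItems titles, each followed by a
// one-second <break/>. The result has no <speak> wrapper, like ssml(true).
function buildDealsSsml(titles, maxItems = 10) {
    return titles
        .slice(0, maxItems)
        .map((title) => `${escapeXml(title)} <break time="1s"/>`)
        .join(" ");
}
```

For example, buildDealsSsml(["A & B", "C"]) returns the string A &amp;amp; B &lt;break time="1s"/&gt; C &lt;break time="1s"/&gt; in raw SSML form, that is, `A &amp; B <break time="1s"/> C <break time="1s"/>`.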

After we’ve created our SSML response, we can return it as normal.

The last step towards our AWS Lambda logic is to connect our two handlers. Back in the exports.handler function, make it look like the following:

```javascript
exports.handler = async (event, context) => {
    if (!skill) {
        skill = Alexa.SkillBuilders.custom()
            .addRequestHandlers(
                FrontpageDealsHandler,
                AboutHandler
            )
            .addErrorHandlers(ErrorHandler)
            .create();
    }
    const response = await skill.invoke(event, context);
    return response;
};
```

Notice in the above code that we’ve simply added the two handlers to the addRequestHandlers method. The SDK checks request handlers in the order they are registered and uses the first one whose canHandle returns true. Technically, we could now package this code and deploy it to Lambda. However, it’s a good idea to add test cases first so we can test offline, locally.
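The dispatch step can be modeled in a few lines. The sketch below is a simplified illustration of the behavior just described, not the SDK’s actual implementation: the first request handler whose canHandle returns true handles the request, and both unmatched requests and errors thrown by a handler fall through to the error handlers. The error message is made up for the sketch.

```javascript
// Simplified model of request dispatch (illustration only, not SDK code).
async function dispatch(input, requestHandlers, errorHandlers) {
    try {
        // The first registered handler whose canHandle() returns true wins.
        for (const handler of requestHandlers) {
            if (await handler.canHandle(input)) {
                return await handler.handle(input);
            }
        }
        throw new Error("No request handler can handle this request");
    } catch (error) {
        // Unmatched requests and errors thrown by handlers end up here.
        for (const handler of errorHandlers) {
            if (await handler.canHandle(input, error)) {
                return await handler.handle(input, error);
            }
        }
        // No error handler matched either, so surface the original error.
        throw error;
    }
}
```

Because registration order decides ties, a broad handler registered first would shadow the more specific ones after it.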

Open the project’s test/about.js file and include the following:

```javascript
const { expect } = require("chai");
const main = require("../src/main");

describe("Testing a session with the AboutIntent", () => {
    let speechResponse = null;
    let speechError = null;

    // Invoke the Lambda handler once with a sample AboutIntent request.
    before(async () => {
        try {
            speechResponse = await main.handler({
                "request": {
                    "type": "IntentRequest",
                    "intent": {
                        "name": "AboutIntent",
                        "slots": {}
                    },
                    "locale": "en-US"
                },
                "version": "1.0"
            });
        } catch (error) {
            speechError = error;
        }
    });

    describe("The response is structurally correct for Alexa Speech Services", () => {
        it("should not have errored", () => {
            expect(speechError).to.be.null;
        });

        // to.exist fails on both null and undefined, unlike not.to.be.null.
        it("should have a version", () => {
            expect(speechResponse.version).to.exist;
        });

        it("should have a speechlet response", () => {
            expect(speechResponse.response).to.exist;
        });

        it("should have a spoken response", () => {
            expect(speechResponse.response.outputSpeech).to.exist;
        });
    });
});
```

We’re using Mocha and Chai to make the above code possible. Because we know the format of the requests Alexa sends, we can create a sample request and pass it to our main.handler function, which is the exports.handler function in our src/main.js file. Notice that the sample request names the AboutIntent, which is what our AboutHandler object checks for. After executing the request, we look at the response and validate that it meets certain criteria. The above code is a deliberately simple example that only checks the structure of the response, but you could make the assertions as detailed as you like, for example by checking the spoken text. Run the tests with npx mocha, which picks up the files in the test directory by default.
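Both test files build the same request envelope by hand. A small factory can remove that duplication; buildIntentRequest below is a hypothetical helper (for example in a test/helpers.js file, which Mocha would also load, harmlessly, since it defines no tests). It produces only the minimal fields used above, while real envelopes from Alexa also carry session and context data.

```javascript
// Hypothetical helper: build the minimal IntentRequest envelope used in the tests.
function buildIntentRequest(intentName, slots = {}, locale = "en-US") {
    return {
        version: "1.0",
        request: {
            type: "IntentRequest",
            intent: { name: intentName, slots },
            locale
        }
    };
}

module.exports = { buildIntentRequest };
```

A test could then call main.handler(buildIntentRequest("AboutIntent")) in its before hook.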

Now take a look at the project’s test/frontpagedeals.js file:

```javascript
const { expect } = require("chai");
const main = require("../src/main");

describe("Testing a session with the FrontpageDealsIntent", () => {
    let speechResponse = null;
    let speechError = null;

    // A regular function (not an arrow) so this.timeout() is available: the
    // handler fetches the live feed, which can exceed Mocha's 2-second default.
    before(async function () {
        this.timeout(10000);
        try {
            speechResponse = await main.handler({
                "request": {
                    "type": "IntentRequest",
                    "intent": {
                        "name": "FrontpageDealsIntent",
                        "slots": {}
                    },
                    "locale": "en-US"
                },
                "version": "1.0"
            });
        } catch (error) {
            speechError = error;
        }
    });

    describe("The response is structurally correct for Alexa Speech Services", () => {
        it("should not have errored", () => {
            expect(speechError).to.be.null;
        });

        it("should have a version", () => {
            expect(speechResponse.version).to.exist;
        });

        it("should have a speechlet response", () => {
            expect(speechResponse.response).to.exist;
        });

        it("should have a spoken response", () => {
            expect(speechResponse.response.outputSpeech).to.exist;
            expect(speechResponse.response.outputSpeech.ssml).to.exist;
        });
    });
});
```

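You’ll notice it is the same as our previous test, except that the sample request references the FrontpageDealsIntent. Unlike the AboutIntent test, this one is not fully offline: FrontpageDealsHandler fetches the live Slickdeals feed, so the test needs network access. Also, if that fetch fails, the ErrorHandler still returns a well-formed response, so these structural checks would still pass. In production, we won’t have to define the intent manually because Alexa determines it for us. However, once Alexa has determined the correct intent, the steps are the same.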
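One way to make the deals test truly offline is to inject the feed loader instead of calling getFrontpageDeals directly. The sketch below assumes a hypothetical createFrontpageDealsHandler factory that is not part of the original code; to stay dependency-free it builds the SSML string by hand (with minimal escaping) rather than using ssml-builder.

```javascript
// Minimal escaping for characters that are invalid as raw SSML text.
function escapeXml(text) {
    return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Hypothetical factory: fetchDeals is injected, so a test can pass a stub
// instead of calling slickdeals.net.
function createFrontpageDealsHandler(fetchDeals) {
    return {
        canHandle(input) {
            const request = input.requestEnvelope.request;
            return request.type === "IntentRequest" &&
                request.intent.name === "FrontpageDealsIntent";
        },
        async handle(input) {
            const feed = await fetchDeals();
            const items = feed.rss.channel[0].item || [];
            // Each title followed by a one-second break, as in the article.
            const speech = items
                .map((item) => `${escapeXml(item.title[0])} <break time="1s"/>`)
                .join(" ");
            return input.responseBuilder.speak(speech).getResponse();
        }
    };
}
```

In src/main.js the production handler would then be createFrontpageDealsHandler(getFrontpageDeals), while a Mocha test could pass a stub that resolves to a small hand-written feed object.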

Deploying the Node.js Project to AWS Lambda

With the code ready to go, we need to deploy it on AWS Lambda so it can be connected to Alexa. However, deploying a Node.js project to Lambda isn’t as easy as copying the code and the package.json file. Instead, we need to bundle the code with all the dependencies, and then deploy it.

From the project root, execute the following command:

```bash
zip -r handler.zip ./node_modules/* ./src/main.js
```

The above command recursively creates an archive that includes the contents of node_modules as well as the src/main.js file. This ZIP archive is what we’ll deploy to AWS Lambda. Note that if you end up using native dependencies, you’ll have to go through more steps. Native dependencies are those that include C or C++ libraries compiled for a particular operating system or architecture. If you have native dependencies, you can use an approach like the one in my tutorial titled Deploying Native Node.js Dependencies on AWS Lambda.

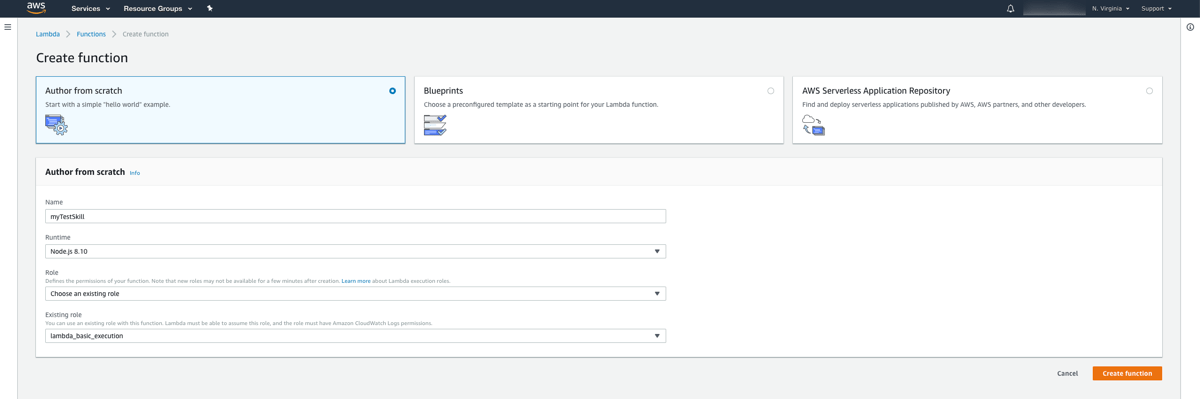

With the ZIP archive in hand, head to the AWS Console for Lambda and create a new function. Choose a Node.js runtime (the code uses async/await, so Node.js 8.10 or later) and give the function a name:

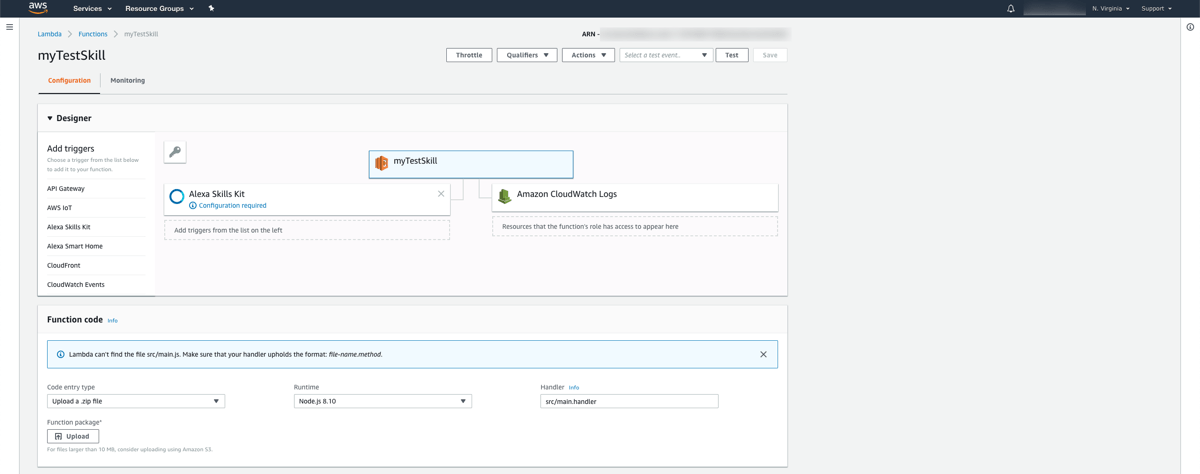

With the function created, you need to add an Alexa Skills Kit trigger. It will ask for your Skill ID, but you don’t have one yet, so disable Skill ID verification for now. Adding a Skill ID prevents third parties from invoking your function, so you’ll want to enable it once your Skill exists.

When configuring your function, upload the ZIP archive you created and set the handler to src/main.handler, which tells Lambda to call the handler export in src/main.js. You could test the function in the Lambda console at this point, but the local tests should already give you confidence that it works.

Configuring the Skill to Interact with AWS Lambda for Deployment

Now that we have the logic and the function created, we can start configuring how Alexa will interact with the project. Remember, AWS Lambda and Amazon Alexa are two very different things, so we need to connect the two.

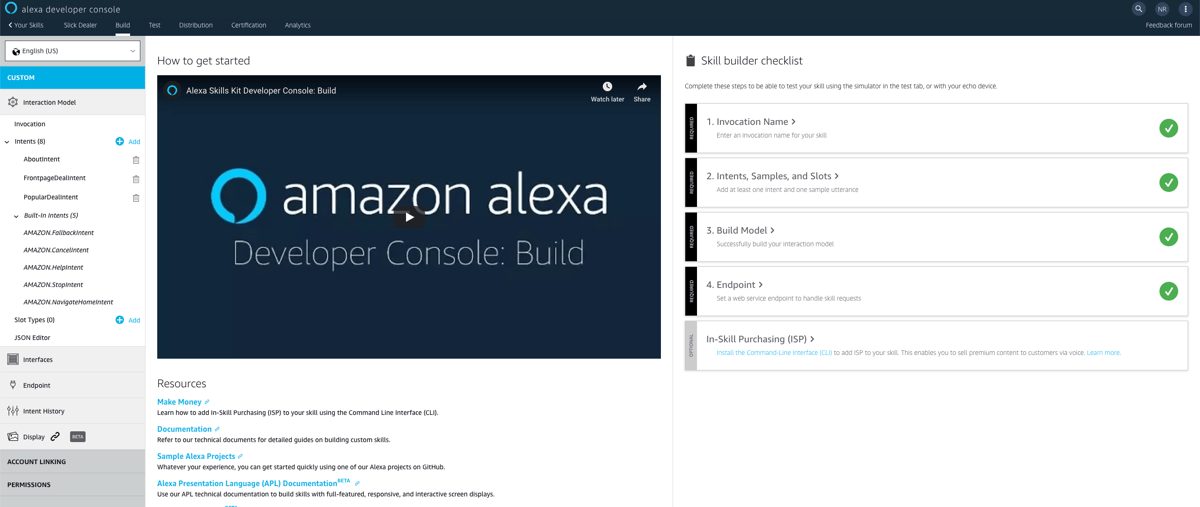

Go to your Amazon Alexa Developer Portal and create a new Skill. Give it a name and choose the Custom model, since we won’t be using any of the templates that Amazon offers.

After creating a Skill, you’ll see a dashboard with a checklist of activities to do before publishing.

First you’ll want to give your Skill an invocation name. This is the name that people will use when they wish to use your Skill. I encourage you to use real words that can be understood regardless of accent. Remember, Alexa has to make sense of what people ask it.

Next, you’ll want to define the sample phrases, otherwise known as sample utterances. These teach Alexa which intent to associate with the words a user speaks. Remember, Alexa decides which intent is sent to your handlers, and the sample utterances are what it uses to decide. Each possible intent should receive its own set of sample phrases.

For example, the AboutIntent might have phrases like this:

tell me about this application

tell me about this skill

who made this application

who made this skill

The list could go on forever. The more sample phrases, the better the user experience. When it comes to the FrontpageDealsIntent you might have sample utterances that look like the following:

give me the front page deals

what are the front page deals

are there any front page deals

get me the front page deals

Again, the more combinations the better because it will help Alexa determine which intent you’re trying to execute within your AWS Lambda function.
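If you prefer the console’s JSON Editor to entering phrases one at a time, the utterances above can be assembled into an interaction model. This is a sketch under assumptions: the invocation name "slick dealer" is a placeholder, and the built-in AMAZON.CancelIntent, AMAZON.HelpIntent and AMAZON.StopIntent are included because new custom Skills typically start with them. The Lambda code in this tutorial has no handlers for those built-ins, so they would reach the ErrorHandler.

```javascript
// Sample utterances from this tutorial, keyed by intent name.
const sampleUtterances = {
    AboutIntent: [
        "tell me about this application",
        "tell me about this skill",
        "who made this application",
        "who made this skill"
    ],
    FrontpageDealsIntent: [
        "give me the front page deals",
        "what are the front page deals",
        "are there any front page deals",
        "get me the front page deals"
    ]
};

function buildInteractionModel(invocationName, utterancesByIntent) {
    // Built-in intents kept alongside the custom ones.
    const builtIns = ["AMAZON.CancelIntent", "AMAZON.HelpIntent", "AMAZON.StopIntent"]
        .map((name) => ({ name, samples: [] }));
    // One entry per custom intent, carrying its sample utterances.
    const custom = Object.entries(utterancesByIntent)
        .map(([name, samples]) => ({ name, slots: [], samples }));
    return {
        interactionModel: {
            languageModel: {
                invocationName,
                intents: [...builtIns, ...custom],
                types: []
            }
        }
    };
}

const model = buildInteractionModel("slick dealer", sampleUtterances);
console.log(JSON.stringify(model, null, 2));
```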

As you work through the publishing checklist, you’ll reach a step where you need to link your AWS Lambda function. Copy the function’s ARN from the Lambda dashboard and apply it in the Alexa dashboard so that Alexa knows which function to call. Likewise, you can copy the Skill ID from the Alexa dashboard into the Lambda function’s Alexa Skills Kit trigger to restrict access.
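The trigger’s Skill ID verification performs this check before your code runs, and ask-sdk-core’s skill builder offers a withSkillId() method for doing it inside the function. The hand-rolled sketch below only illustrates what is being compared: the applicationId in the request envelope’s context against your Skill ID. The ID shown is a placeholder, and isFromExpectedSkill is a hypothetical name. If you enforce such a check in code, the minimal test envelopes used earlier (which have no context) would need a matching context block.

```javascript
// Placeholder: replace with the Skill ID from the Alexa dashboard.
const EXPECTED_SKILL_ID = "amzn1.ask.skill.your-skill-id";

// Hypothetical check: true only when the envelope names the expected Skill.
// Envelopes without a context block are rejected.
function isFromExpectedSkill(event, expectedSkillId) {
    const application = event.context &&
        event.context.System &&
        event.context.System.application;
    return Boolean(application) && application.applicationId === expectedSkillId;
}
```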

Conclusion

We just revisited how to create Amazon Alexa Skills using Node.js and AWS Lambda. I had previously written about creating a Skill and about testing a Skill offline with Mocha, but this time around we accomplished both with a much cleaner approach.

The example we used in this tutorial was based on my Golang example titled, Build an Alexa Skill with Golang and AWS Lambda. I actually built it to take advantage of a promotion that was running to get me a free Echo Show device.